Hi there,

I have my own self-hosted zrok instance on a well-specced machine. I use zrok to get around the CG-NAT that prevented me from hosting my own websites, game servers, and Plex.

Unfortunately, we noticed problems quickly. Plex seems to completely break the share if a user sends requests too quickly or if more than one user watches content at the same time. It fails with “error: timeout waiting to put message in send queue: context deadline exceeded”.

This happens not only with Plex but also with other services like Jellyfin. Our network is not saturated; in fact, this can happen while using minimal bandwidth. The share was created on the Plex host machine using “zrok reserve public https://IP:32400 -n plex”, then “zrok share reserved plex”.

It works well for things like music (and, somehow, high-speed downloads), but once video streams come through, the whole share seems to go “stale” over time. When I restart the share it works for a little while, then fails again and needs another restart. Eventually the whole web share crashes with a zrok “bad gateway” message.

Does anyone have any advice? Alternatives? Maybe a different command flag? If this doesn’t work, I’m not sure what to do. I’ve invested so much time and money into making all this work, and I’m close to giving up tbh.

Thanks.

That error indicates that the TCP connection between the zrok client and the Edge Router in the zrok network is “backing up”: the connection isn’t sending data quickly enough, so data accumulates until the application can’t buffer any more. If it builds up over time, my guess is that a burst of errors is collapsing the TCP window on the connection.

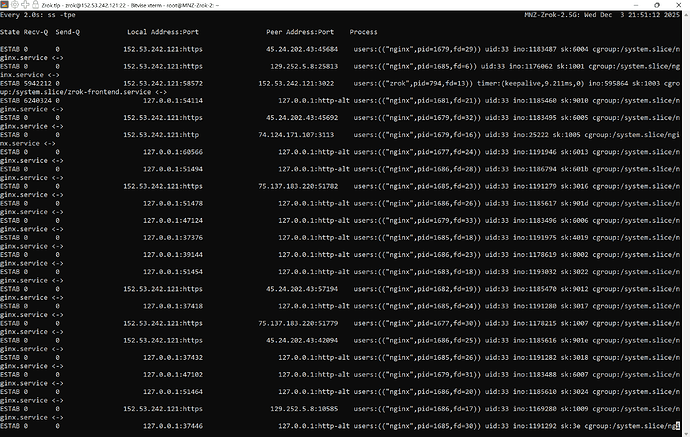

You can try some basic monitoring on the zrok hosting system, such as: watch “ss -tpe”. That shows the connections and their send and receive queues (the Send-Q and Recv-Q columns), refreshed every 2 seconds. You should see the send queue grow at the same time as these errors, which would mean the issue is in the host’s TCP stack. You could also take a tcpdump of the connection and review it, if you are familiar with that process. I often set up a rolling set of capture files and capture only the headers, which keeps the files small while still showing the errors, window sizes, etc.
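If the raw ss output is hard to read, a small filter can show only the sockets worth looking at. This is a minimal sketch, not an official zrok tool: the function name `zrok_sendq` is hypothetical, the default threshold of 100 bytes is an arbitrary assumption, and it assumes the client process appears in `ss -p` output as `"zrok"` and that your ss supports `-H` (no header line).

```shell
# Hypothetical helper: read `ss -tnpH` output on stdin and print only TCP sockets
# owned by a process named "zrok" whose Send-Q exceeds a threshold (default 100 bytes).
# Column order without a state filter: State Recv-Q Send-Q Local Peer Process
zrok_sendq() {
  threshold="${1:-100}"
  awk -v t="$threshold" '
    /"zrok"/ && $3 + 0 > t + 0 {
      printf "send-q=%s recv-q=%s peer=%s\n", $3, $2, $5
    }'
}
```

Run it as root so `-p` can see the zrok process, for example `while true; do date; ss -tnpH | zrok_sendq 100; sleep 2; done`, and compare the timestamps with when the send-queue errors appear.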

sudo tcpdump -s <CAPTURE LENGTH> -W <FILECOUNT> -C <FILESIZE in MB> -w <FILENAME> '<FILTER>'

I usually use 10 files, a 64-byte capture length, and 100MB files. Then I grab the file that covers the time of the problem and open it in Wireshark.
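Filled in with those values, the template might look like the sketch below. The function name `capture_edge` and the output name `zrok-edge.pcap` are hypothetical, and the port you pass is assumed to be the TCP port your zrok client uses to reach the Edge Router (it depends on your deployment). Note that `-C` counts in millions of bytes, and with `-W` tcpdump starts overwriting the oldest file once the set is full, so disk usage stays around 1 GB.

```shell
# Hypothetical wrapper for the rolling capture described above.
# $1: TCP port of the Edge Router connection (placeholder, deployment-specific).
capture_edge() {
  port="${1:?usage: capture_edge <edge-router-port>}"
  # -s 64: keep only the first 64 bytes of each packet (mostly headers)
  # -W 10 -C 100: ring buffer of 10 files of about 100 MB each
  # -w: output files are named zrok-edge.pcap followed by a file number
  sudo tcpdump -s 64 -W 10 -C 100 -w zrok-edge.pcap "tcp port ${port}"
}
```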

You might also try tuning the OS TCP stack; there are a number of articles on the web about the available settings, depending on the host system. I’m not familiar enough with Plex to know whether it recommends specific settings.
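Before changing anything, it helps to record the current values so you can compare or roll back. The sketch below only reads a few standard Linux TCP buffer settings from /proc/sys; it does not suggest values. The function name is hypothetical, and on a TrueNAS LXC setup some of these may only be visible or writable on the host, not inside the container.

```shell
# Print a few Linux TCP buffer settings (read-only; changes nothing).
# Entries that are not readable here (e.g. inside some containers) are reported as unavailable.
show_tcp_buffers() {
  for key in net.core.rmem_max net.core.wmem_max net.ipv4.tcp_rmem net.ipv4.tcp_wmem; do
    path="/proc/sys/$(printf '%s' "$key" | tr . /)"
    if [ -r "$path" ]; then
      printf '%s = %s\n' "$key" "$(cat "$path")"
    else
      printf '%s = (unavailable)\n' "$key"
    fi
  done
}
```

A change made with `sysctl -w key=value` lasts until reboot; if one helps, it can be persisted in a file under /etc/sysctl.d/.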

Try at least the watch and see what you find. If the send queue is backing up, as I suspect, try the optimizations. If you know packet analysis, that is of course the best way to really understand what is happening at that level.

Hi @SynthwaveFox.

Have you tried enabling the superNetwork flag? See: openziti/zrok v1.0.8 on GitHub

This enables multiple connections, including a separate connection for control data, and moves flow control to the endpoints. That removes some bottlenecks where one user’s connections could interfere with another’s.
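Since superNetwork arrived in v1.0.8, one quick sanity check is that the installed client is at least that version. This is a minimal sketch: `version_at_least` is a hypothetical helper that compares dotted versions using GNU `sort -V`, and how you obtain the version string (for example from `zrok version`) is an assumption, since its exact output format isn’t covered in this thread.

```shell
# Hypothetical helper: succeed if version $1 >= version $2 (dotted, optional leading "v").
# Relies on GNU `sort -V` for version-aware ordering.
version_at_least() {
  have="${1#v}"
  want="${2#v}"
  # The smaller of the two sorts first; if that is $want, then $have >= $want.
  [ "$(printf '%s\n%s\n' "$have" "$want" | sort -V | head -n 1)" = "$want" ]
}
```

For example: `version_at_least "$(zrok version | grep -oE '[0-9]+\.[0-9]+\.[0-9]+' | head -n 1)" 1.0.8 && echo ok`, assuming the version number appears in that output.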

Let me know if you see a difference,

Thank you,

Paul

I’ll be honest, I have no clue what I’m looking at with the watch command. I did enable superNetwork as @plorenz suggested, and we saw a slight improvement: file posters were able to load in the web UI while a user streamed, but the share soon ground to a halt again.

In case it points to a configuration issue, @mike.gorman: the zrok share for Plex runs in a TrueNAS LXC (an Ubuntu container inside TrueNAS) and targets the machine’s own local IP and Plex’s port, since localhost wouldn’t work from inside a container. TrueNAS runs Plex in Docker, so I can’t run zrok on the same server, only on the same physical machine.

I was expecting to see nonzero values in the queues, and significantly so (small double digits are normal, depending on the timing of the check). Is the error about putting messages in the send queue still present after enabling the superNetwork flag?